The Real Tech Revolution in the Legal Profession (WORDS x NUMBERS)

Much has been said about Artificial Intelligence and its impact on the legal profession. Some futurologists even claim that the profession will be entirely replaced by robots and computers, a prediction that has caused a “frisson” and aroused considerable insecurity among many professionals.

I do not agree with this categorical statement, but I do agree that these new technologies are imposing a revolution. In my humble opinion, this technological wave will indeed bring about a revolution, not only in the legal profession but in all the so-called human professions, those that still rely heavily on interaction between human beings in order to be exercised.

First of all, we must dissect the term “Artificial Intelligence”, which is nothing more than a series of technological advances that enable the application of various theories and statistical models, some of which have existed for many years.

Let us start with Moore’s law.

Gordon Moore predicted in 1965 that the capacity of processors (chips) would double every 12 months as their components shrank, and in 1975 he revised the pace to roughly every two years. The prediction has held up over all these years, although it is expected to reach a physical limit of size (measured in nanometers) around 2025. This exponential increase in processing capacity has made possible what was once unthinkable, especially when it comes to words, images and video, which require far more “muscle” than is needed to process numbers.
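To give a feel for what steady doubling means, here is a minimal sketch of the arithmetic. The function name `growth_factor` is illustrative, and the doubling period is left as a parameter because quoted figures range from one to two years; it is a simplified model, not a description of any real chip.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Capacity multiplier after `years` of steady doubling."""
    return 2.0 ** (years / doubling_period_years)


# Compare doubling every year with doubling every two years.
for period in (1.0, 2.0):
    for years in (10, 20, 40):
        factor = growth_factor(years, period)
        print(f"doubling every {period:g} yr, after {years} yr: x{factor:,.0f}")
```

Even at the slower pace, forty years of doubling multiplies capacity by about a million, which is the kind of jump that makes text and image processing practical.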

Another very important factor, closely linked to the previous one, is how long industry in general has devoted itself to developing tools for processing numbers and other structured information.

Database analysis tools began to be developed in the 60s. In 1970, IBM introduced the concept of the relational database, from which SQL (Structured Query Language) emerged in the following years, and in 1979 Oracle Version 2 was released. Together, these revolutionized the way numerical data were handled.

It was only at the end of the 90s that Google revolutionized the way we find words on the Internet (Google search launched in 1998 and quickly became a great success). This gap of nearly 40 years partly explains why tools that deal with words are only now beginning to appear.

Continuing with hardware development, we must also mention the evolution of communication speed. Three examples: Internet2, with a theoretical speed of 10 Gbps (meaning that a DVD with a capacity of 4.7 GB could be transferred in about 3.8 seconds); the IPv6 addressing protocol, which offers about 79 octillion times the address capacity of IPv4; and, finally, 5G technology, whose rollout in Brazil is estimated to occur in 2018 and which has 10 times the speed of 4G.
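The figures in the previous paragraph can be checked with a few lines of arithmetic. This is a back-of-the-envelope sketch that assumes a decimal 4.7 GB disc, the full theoretical 10 Gbps rate with no protocol overhead, and the standard address widths of 32 bits for IPv4 and 128 bits for IPv6; other assumptions give slightly different results.

```python
DVD_BYTES = 4.7e9             # single-layer DVD, decimal gigabytes (assumption)
LINK_BITS_PER_SECOND = 10e9   # theoretical 10 Gbps, no overhead (assumption)


def transfer_seconds(size_bytes: float, bits_per_second: float) -> float:
    """Ideal transfer time: total bits divided by the link rate."""
    return size_bytes * 8 / bits_per_second


# IPv4 addresses are 32 bits wide, IPv6 addresses are 128 bits wide.
IPV4_ADDRESSES = 2 ** 32
IPV6_ADDRESSES = 2 ** 128
address_ratio = IPV6_ADDRESSES // IPV4_ADDRESSES  # equals 2 ** 96

print(f"DVD over 10 Gbps: {transfer_seconds(DVD_BYTES, LINK_BITS_PER_SECOND):.2f} s")
print(f"IPv6 / IPv4 address ratio: {address_ratio:.2e}")
```

The ratio comes out at roughly 7.9 × 10^28, that is, about 79 octillion on the short scale.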

According to IBM, 88% of the information that exists today and is available on the internet is still inaccessible to digital and statistical processing (the big leap that databases brought about in the 60s), because it consists of words, images and sounds. Also according to IBM, Watson can currently process (and interpret) 800 million documents in just one second!

All this technological progress has allowed the research and development industry to focus on solving the difficulties we have had (so far) in the digital processing of so-called “natural human language”, going beyond simple, fast word search.

There are dozens of statistical algorithms (some have existed for years), such as decision trees, clustering, ensembles, regressions and Naive Bayes. Combined with dictionaries of meanings and synonyms, they allow computers to interpret words within a given context and, as a consequence, to interpret the meaning of the whole text.

Whichever algorithms are used, their responses can be corrected, so that the system improves as results are displayed and the accuracy of its responses increases. These corrections can be made by human beings (“supervised machine learning”) or automatically by the computer through statistical analysis (“unsupervised machine learning”).

The example I often use is how a machine (a set of hardware and software) can tell contexts apart when a lawyer searches for the Portuguese word “mandado” (which can mean “warrant” or “ordered”). Take two electronically stored texts. One contains the sentence “the judge had ordered the carrier to fetch his clothes at his house”; the other contains “the judge issued a search and seizure warrant for the house”. The lawyer searches for texts containing judge, warrant and house. Only through algorithms that analyze the rest of the text can a “smart search engine” differentiate the two sentences and return the correct one.

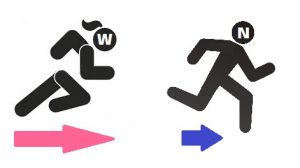
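The “mandado” example can be sketched in a few lines of Python. This is a toy illustration, not the method of any particular product: it uses a small hand-written Naive Bayes classifier, and both the Portuguese renderings of the two sentences and the six labeled training snippets are made up for the demonstration.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled contexts for the Portuguese word "mandado".
LABELED_CONTEXTS = [
    ("juiz expediu mandado de busca e apreensão", "warrant"),
    ("oficial cumpriu mandado de prisão", "warrant"),
    ("polícia apresentou mandado judicial", "warrant"),
    ("havia mandado o funcionário buscar documentos", "ordered"),
    ("tinha mandado o motorista entregar roupas", "ordered"),
    ("chefe havia mandado o portador esperar", "ordered"),
]


def train(examples):
    """Count how often each word appears with each sense."""
    word_counts = defaultdict(Counter)
    sense_counts = Counter()
    for text, sense in examples:
        sense_counts[sense] += 1
        word_counts[sense].update(text.split())
    vocabulary = {w for counts in word_counts.values() for w in counts}
    return word_counts, sense_counts, vocabulary


def classify(text, model):
    """Return the most probable sense, using add-one smoothing."""
    word_counts, sense_counts, vocabulary = model
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense in sense_counts:
        score = math.log(sense_counts[sense] / total)
        denominator = sum(word_counts[sense].values()) + len(vocabulary)
        for word in text.split():
            score += math.log((word_counts[sense][word] + 1) / denominator)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense


model = train(LABELED_CONTEXTS)

# Illustrative renderings of the two stored sentences.
documents = [
    "o juiz havia mandado o portador buscar suas roupas em sua casa",
    "o juiz expediu um mandado de busca e apreensão na casa",
]
query = ["juiz", "mandado", "casa"]

# A plain keyword search cannot tell the two texts apart.
keyword_hits = [d for d in documents if all(w in d.split() for w in query)]

# The "smart" search keeps only texts where "mandado" means "warrant".
smart_hits = [d for d in keyword_hits if classify(d, model) == "warrant"]

print(len(keyword_hits), "keyword hits;", len(smart_hits), "smart hit")
print(smart_hits[0])
```

The keyword search returns both texts, because both contain all three query words. The classifier looks at the neighbouring words (“havia”, “o” and “buscar” on one side, “expediu”, “de busca e apreensão” on the other) and keeps only the warrant. A real system would be trained on far more text, but the principle of turning surrounding words into counts and probabilities is the same.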

As I have already mentioned, in my humble opinion, the great revolution happening in law (and in all other human professions) is the capacity to handle words digitally and statistically. That is, “words are coming closer to numbers”!